Projects

BMBF Project:

TriFORCE: Learning adaptive reusable skills for intelligent autonomous agents

PI: Carlo D'Eramo

E-Mail: carlo.deramo@uni-wuerzburg.de

Project duration: 2023-2026

Project description

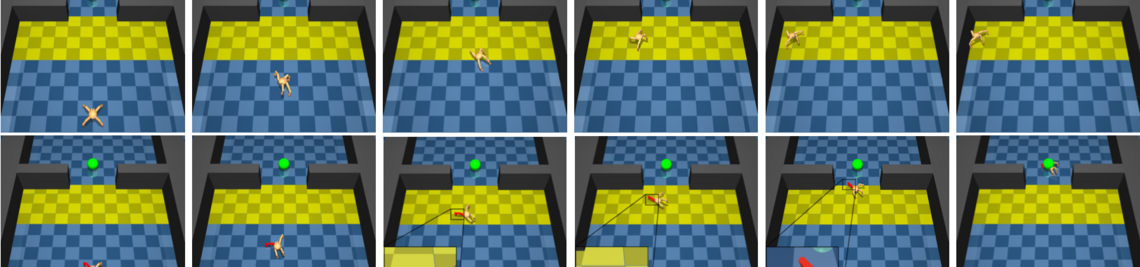

This project will extend the current limits of Deep RL to improve its practicality in realistic high-dimensional problems. Our contributions aim to make Deep RL more efficient and easier to use in real-world environments. We will pursue this goal by addressing three fundamental problems of Deep RL: computational and data requirements, human supervision and tuning, and task overfitting. Although agents trained with Deep RL can perform excellently, they have a strong tendency to overfit the available data.

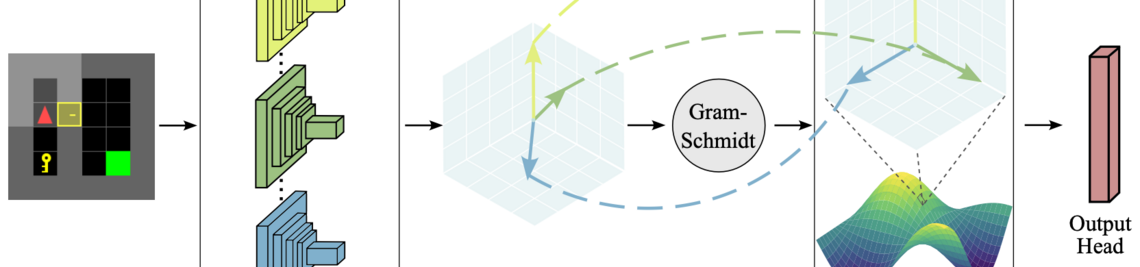

In this project, we will show that these seemingly different problems share similarities and common causes. We will address them by introducing a new unifying view that will guide our research toward more efficient and practical Deep RL methods that can be used in everyday applications.

We will demonstrate the importance of our methodological advances by learning locomotion skills for a leg-operated wheelchair. In this application, the wheelchair needs to move robustly and safely at different speeds, avoid obstacles, and adapt to different terrains. In addition, the gait pattern should be adapted to the needs and preferences of the human user. This is a realistic problem in which multiple skills are required, such as navigating to a destination, walking up stairs, and avoiding obstacles along the way.

The TriFORCE team is made up of outstanding scientists, an advisory board with renowned researchers, female leaders from academia and partners from industry.

Connectom project:

LExRoL: Lifelong Explainable Robot Learning

PI: Carlo D'Eramo

Co-PI: Georgia Chalvatzaki

E-Mail: carlo.deramo@uni-wuerzburg.de, georgia.chalvatzaki@tu-darmstadt.de

Project duration: Mar 2023 - Dec 2023

Project description

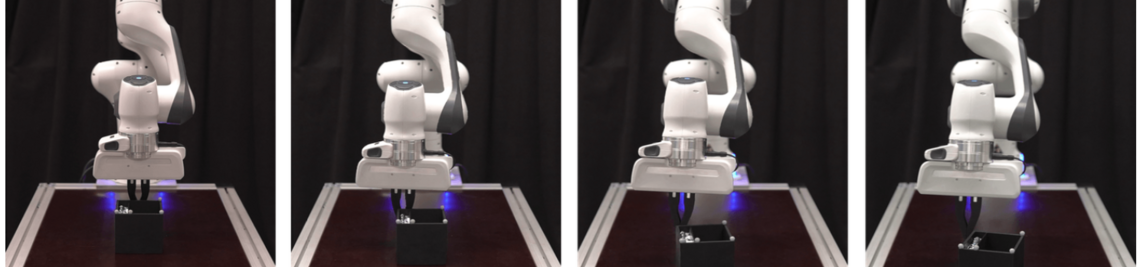

Lifelong robot learning requires that an agent form representations that are useful for learning a series of tasks continually, while avoiding catastrophic forgetting of earlier skills. In this project, we study a method that allows robots to learn, through multimodal cues, a series of behaviors that are easily composable for synthesizing more complex behaviors. We propose adapting large foundation models pretrained on language and vision to robot-oriented tasks. We investigate the design of novel parameter-efficient residuals for lifelong reinforcement learning (RL) that would allow us to build on previous representations when learning new skills, while avoiding the two key issues of task interference and catastrophic forgetting. Crucially, we investigate forward and backward transfer, and inference, through the lens of explainability. The aim is to enable robots to explain to non-expert users the similarities found across tasks throughout their training life, and even to map their actions while executing a task into natural language explanations. We argue that explainability is a crucial component for increasing the trustworthiness of AI robots during their interaction with non-expert users, and that it opens new avenues of research in lifelong RL and robotics.